Generative AI (GenAI) is transforming industries, but its effectiveness depends on how it incorporates and processes data. Two primary approaches to improving AI performance—Retrieval Augmented Generation (RAG) and fine-tuning—offer different benefits. While fine-tuning modifies a model’s internal knowledge by retraining it on new datasets, RAG retrieves up-to-date, external data at inference time.

DataMotion prioritizes RAG because it aligns with our core principles of security, compliance, and real-time AI accuracy. For enterprises handling sensitive information—such as financial services and healthcare providers—RAG presents a more efficient and secure method to enhance AI-driven interactions without the costly and rigid process of model fine-tuning.

Comparing RAG vs. Fine-Tuning for LLM Optimization

Both techniques require additional data, but they differ in their approach:

- Retrieval Augmented Generation (RAG): Allows AI models to access and incorporate the latest relevant data at inference time, ensuring responses are based on real-time, external knowledge rather than static, pre-trained information.

- Fine-Tuning AI Models: Retrains a large language model (LLM) on specific datasets to embed domain expertise, reducing reliance on external data and improving long-term model performance. However, this approach risks catastrophic forgetting – where older knowledge is overwritten – and requires significant computational resources and retraining every time new data is introduced.
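
To make the RAG half of this comparison concrete, here is a minimal sketch of retrieval at inference time. It illustrates the idea under stated assumptions and is not DataMotion's implementation: the `KNOWLEDGE_BASE` contents, the word-overlap `score`, and the helper names `retrieve` and `build_prompt` are all hypothetical, and a production system would typically rank documents with embedding-based search rather than word overlap.

```python
import re
from collections import Counter

# Hypothetical in-memory knowledge base; a real deployment would query a document store.
KNOWLEDGE_BASE = [
    "Wire transfers above 10000 USD require manager approval.",
    "Password resets are verified with a one-time code sent by email.",
    "Fraud alerts must be acknowledged within 24 hours.",
]

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def score(query, doc):
    """Count the tokens shared by the query and the document (toy relevance score)."""
    shared = Counter(tokenize(query)) & Counter(tokenize(doc))
    return sum(shared.values())

def retrieve(query, docs, k=1):
    """Return the k documents with the highest overlap score."""
    ranked = sorted(docs, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:k]

def build_prompt(query, docs, k=1):
    """Place the retrieved text in the prompt; the model's weights are never changed."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

print(build_prompt("How are fraud alerts handled?", KNOWLEDGE_BASE))
```

The prompt returned by `build_prompt` would then be sent to whichever LLM is in use. Fine-tuning, by contrast, would change the model itself and leave the prompt untouched.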

| Factor | Retrieval Augmented Generation (RAG) | Fine-Tuning |
|---|---|---|
| Data Source | Retrieves external knowledge in real time | Embeds knowledge directly into the model |
| Implementation Time | Faster – Relies on external knowledge base setup, which can vary in complexity | Slower – Requires dataset preparation and training |
| Cost & Maintenance | Potentially Lower – Depends on knowledge base infrastructure and query volume; No retraining needed | Higher – Requires compute resources & ongoing retraining |
| Real-Time AI Accuracy | High – Always retrieves the most recent data | Moderate – Must be retrained to stay updated |
| Enterprise AI Compliance | Higher – Data stays external, easier to audit | Lower – Embedded data must be carefully managed for compliance |
| Risk of Catastrophic Forgetting | Low – External data prevents loss of older knowledge | High – New training data can overwrite past knowledge |
| Best For | Dynamic, evolving industries (e.g., financial services, compliance-driven AI) | Well-defined, static tasks (e.g., medical research models), stylistic control |

As the table above highlights, neither RAG nor fine-tuning is a one-size-fits-all solution. Organizations should carefully evaluate their data sources, compliance needs, and specific AI use cases. While fine-tuning offers advantages for specialized tasks, particularly with small language models (SLMs), RAG often presents a more agile, cost-effective, and secure solution for regulated enterprises dealing with dynamic and sensitive data.

⇨ Want to dive deeper into Retrieval Augmented Generation? Explore our comprehensive guide to RAG.

Why DataMotion Prioritizes RAG for Enterprise AI Compliance

At DataMotion, we see RAG as the future of AI-driven enterprise solutions. Here’s why:

1. Real-Time Data Access & Compliance

Fine-tuned models struggle to keep pace with changing regulatory frameworks, policies, and customer account information. RAG improves AI accuracy by pulling current data from verified sources at query time, which is critical for industries like financial services, insurance, and healthcare, where compliance and accuracy are paramount.

2. Enhanced Security & Privacy

Fine-tuning involves incorporating private or proprietary data into the language model, which raises concerns about data exposure. In regulated sectors such as finance and healthcare, RAG helps maintain the security of sensitive information by keeping it outside the model. This approach minimizes the risk of data leaks while still providing relevant and personalized responses.
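
One way to picture data staying outside the model is a retrieval step that checks access before any text reaches the prompt. The sketch below is an assumption for illustration, not a description of DataMotion's product: the `Record` type, the `allowed_roles` label, the role names, and the `retrieve_for` helper are all hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Record:
    text: str
    allowed_roles: frozenset  # hypothetical access label stored alongside the data

# Hypothetical records; sensitive text lives here, not in model weights.
RECORDS = [
    Record("Account 1234 balance: 2,500 USD.", frozenset({"account_owner", "agent"})),
    Record("Internal fraud-scoring thresholds.", frozenset({"fraud_team"})),
    Record("Branch opening hours: 9am to 5pm.",
           frozenset({"public", "account_owner", "agent", "fraud_team"})),
]

def retrieve_for(role, keyword, records=RECORDS):
    """Return only records the caller's role may see and that mention the keyword."""
    keyword = keyword.lower()
    return [
        r.text
        for r in records
        if role in r.allowed_roles and keyword in r.text.lower()
    ]

print(retrieve_for("public", "fraud"))      # nothing is released to this role
print(retrieve_for("fraud_team", "fraud"))  # the restricted record is returned
```

Because the filter runs at query time, revoking a role's access takes effect on the next request. Text that has been trained into a model's weights cannot be withdrawn this way.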

3. Cost-Effectiveness & Scalability

Fine-tuning a large language model can be demanding, requiring significant computing power, time, and labeled data. On the other hand, RAG lets businesses use their current knowledge bases without the need for retraining, which means they can update information instantly and save on costs related to AI deployment.
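
The "update instantly" point can be sketched with a toy knowledge base: changing a stored document changes what the next query retrieves, with no training step in between. The `KnowledgeBase` class, its `upsert` and `lookup` methods, and the sample policy text are hypothetical and chosen only for illustration.

```python
class KnowledgeBase:
    """Hypothetical in-memory store keyed by document id."""

    def __init__(self):
        self._docs = {}

    def upsert(self, doc_id, text):
        """Insert a document, or overwrite the one already stored under doc_id."""
        self._docs[doc_id] = text

    def lookup(self, keyword):
        """Return every stored text that contains the keyword (case-insensitive)."""
        keyword = keyword.lower()
        return [text for text in self._docs.values() if keyword in text.lower()]

kb = KnowledgeBase()
kb.upsert("wire-limit", "Daily wire transfer limit is 5000 USD.")
before = kb.lookup("wire transfer")

# A policy change is a data update, not a retraining job.
kb.upsert("wire-limit", "Daily wire transfer limit is 7500 USD.")
after = kb.lookup("wire transfer")

print(before)
print(after)
```

With a fine-tuned model, the same policy change would require preparing new training data and running another training cycle before the model could answer with the new limit.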

4. AI That Evolves with Your Business

For AI to be a valuable tool in customer service, secure messaging, and workflow automation, it must adapt quickly. With RAG, AI models can retrieve the latest policies, case files, and customer data in real time, enabling organizations to provide accurate, up-to-date responses without waiting for model updates.

When Fine-Tuning Makes Sense

While DataMotion prioritizes RAG, fine-tuning has its place in specific use cases, such as:

- Optimizing Small Language Models (SLMs): If an enterprise wants to deploy a lightweight AI solution for a highly specialized task, fine-tuning a smaller model can be more cost-effective to train and operate than using a large general-purpose LLM.

- Long-Term Customization: Fine-tuning is beneficial for static, well-defined tasks where information changes infrequently, such as medical diagnosis models trained on established research.

However, for dynamic industries that require real-time, contextual AI interactions, fine-tuning alone is not enough.

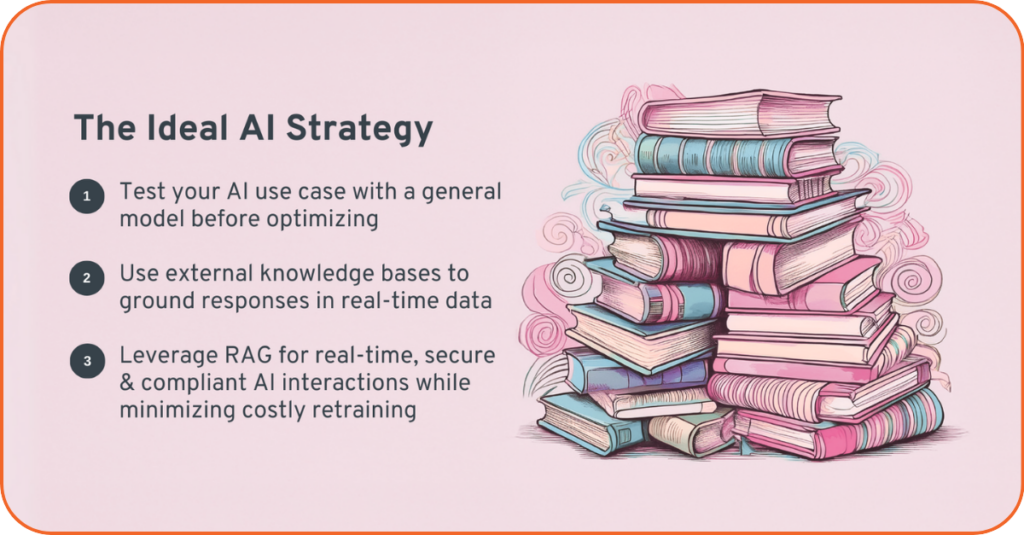

The Ideal AI Strategy: Combining RAG and Fine-Tuning

While some view RAG vs. fine-tuning as an either/or decision, the most efficient AI strategy often combines both approaches:

- Start with a large LLM and strong prompting: Test your AI use case with a general model before optimizing.

- Implement RAG to improve accuracy: Use external knowledge bases to ground responses in real-time data.

- Use fine-tuning selectively: Optimize smaller models for specific, cost-sensitive tasks while relying on RAG for dynamic knowledge retrieval.

Use Case: AI-Powered Customer Support in Financial Services

A financial services firm is deploying an AI-driven customer support assistant to handle customer transactions, fraud alerts, and compliance inquiries while ensuring security and accuracy.

- RAG enhances real-time AI accuracy by retrieving up-to-date customer data, fraud prevention policies, and compliance regulations at the time of the query—ensuring responses reflect the latest information without requiring extensive model updates.

- Smart forms integrated into the RAG data source allow users to quickly access, populate, and submit necessary information and documents, reducing errors, improving compliance, and streamlining financial workflows.

This RAG-powered approach, combined with secure AI-driven automation, delivers efficient, real-time customer support, helping financial institutions reduce agent workload, improve customer satisfaction, and maintain compliance without sacrificing security.

How DataMotion Uses RAG to Drive Secure AI Innovation

At DataMotion, we embed AI-powered solutions, including JenAI Assist™, to enhance secure, compliant, and real-time AI interactions. Our approach integrates:

- Vector embedding with Azure to enable efficient data retrieval.

- Azure Storage for scalable and secure data management.

- Flexible LLM integration, allowing customers to use any AI model through Azure or bring their own model.

- DataMotion tagging and tooling to refine responses and seamlessly connect AI to other platform-level tools.
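
As a rough illustration of how vector embeddings support retrieval, the sketch below ranks documents by cosine similarity to a query. It is a toy under stated assumptions and does not use or represent any Azure API: `embed` is a hypothetical stand-in that builds a word-count vector, where a real system would call a learned embedding model and search a vector index.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy embedding: a sparse word-count vector (stand-in for a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse vectors; 0.0 if either is empty."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def nearest(query, docs):
    """Return the document whose vector is most similar to the query's vector."""
    query_vec = embed(query)
    return max(docs, key=lambda doc: cosine(query_vec, embed(doc)))

# Hypothetical documents for illustration only.
docs = [
    "How to reset a password",
    "Secure message encryption policy",
]
print(nearest("encryption policy for messages", docs))
```

A learned embedding model would also match related wording, such as "messages" and "message", which this word-count stand-in treats as different tokens.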

This approach empowers our customers by enabling them to:

- Receive interaction-specific responses that adapt dynamically to their needs.

- Engage with AI-driven insights while keeping sensitive data private.

- Interact with AI-powered agents for seamless automation and effortlessly escalate to human support when needed.

- Maintain secure, compliant AI-driven messaging with enterprise-grade encryption.

- Retrieve real-time customer data while ensuring privacy and governance.

- Integrate AI seamlessly into workflows without the need for costly model retraining.

By prioritizing RAG, DataMotion enables organizations to harness AI for customer interactions and workflow automation while ensuring secure communications, regulatory compliance, and accelerated ROI. Our agile, adaptable AI solutions help businesses react quickly to evolving needs—without sacrificing security or efficiency.

Common Questions About RAG and Fine-Tuning

Q1: What is Retrieval Augmented Generation (RAG)?

📌 Retrieval Augmented Generation (RAG) is an AI technique that enhances Large Language Models (LLMs) by retrieving real-time, external data at inference time. Unlike fine-tuning, RAG allows AI models to access up-to-date knowledge without retraining, making it ideal for dynamic industries like financial services and healthcare.

⇨ Learn more about how Retrieval Augmented Generation integrates with LLMs to enhance real-time data retrieval and response generation.

Q2: What is Fine-Tuning?

📌 Fine-tuning is a machine-learning technique that modifies an LLM by training it on domain-specific data. It helps embed industry expertise directly into the model but requires continuous retraining as data evolves. While fine-tuning improves specialized knowledge, it risks catastrophic forgetting and is costly to maintain compared to retrieval-based approaches like RAG.

Q3: What is the difference between fine-tuning and RAG?

📌 Fine-tuning modifies a model’s internal knowledge by retraining it with domain-specific data, making it more specialized over time. RAG, on the other hand, retrieves external information when generating responses, ensuring AI uses the most current and relevant data without needing expensive retraining cycles.

Q4: Is RAG better than fine-tuning for secure AI applications?

📌 RAG offers significant advantages for secure AI applications because it does not embed sensitive data directly into the model. Instead, it retrieves relevant data at query time, reducing risks of data leaks and ensuring compliance with security and privacy regulations.

Q5: When should you fine-tune a model instead of using RAG?

📌 Fine-tuning is useful when developing a highly specialized AI model that performs a specific, unchanging task, such as analyzing structured medical data. However, for AI applications requiring real-time, accurate, and secure data—such as customer interactions or financial queries—RAG is the preferred approach.

Unlock the Future of AI with RAG

The ability to retrieve, verify, and incorporate the latest information in real time makes RAG the superior choice for AI-driven enterprise solutions. While fine-tuning has its place, the future of scalable, secure, and cost-effective AI lies in RAG-powered architectures.

To learn more about how DataMotion leverages RAG to enhance AI-driven communications, explore JenAI Assist™, subscribe to our newsletter, or schedule a demo today.